
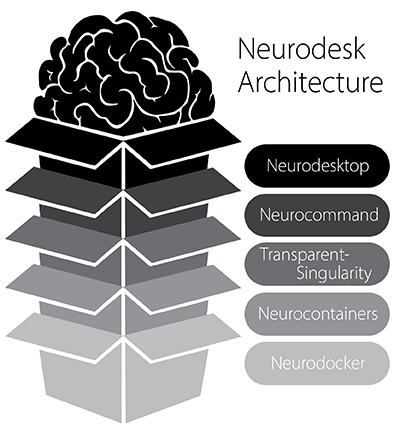

Architecture

1 - Neurodesk Architecture

Neurodesktop: Recommended for Beginners

Neurodesktop is a compact Docker container with a browser-accessible virtual desktop, pre-equipped with basic fMRI and EEG analysis tools, that allows you to develop and implement data analyses. To get started, see: Neurodesktop (GitHub)

- docker container with interface modifications

- contains tools necessary to manage workflows in sub-containers: vscode, git

- CI: builds docker image and tests if it runs; tests if CVMFS servers are OK before deployment

- CD: pushes images to github & docker registry

Neurocommand:

Neurocommand offers the option to install and manage multiple distinct containers for more advanced users who prefer a command-line interface. Neurocommand is the recommended interface for users seeking to use Neurodesk in high performance computing (HPC) environments.

To get started, see: Neurocommand (Github)

- script to install and manage multiple containers using transparent singularity on any linux system

- this repo also handles the creation of menu entries in a general form applicable to different desktop environments

- this repo can be re-used in other projects like CVL and when installing it on bare-metal workstations

- CI: tests if containers can be installed

- CD: this repo checks whether the containers requested in the apps.json file are available on object storage and, if not, builds Singularity containers from the Docker containers and uploads them to object storage
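At its core, the CD check above is a set difference between the containers that are requested and the containers already on object storage. The sketch below shows only that step. It assumes both lists are already available as text files with one container name per line; extracting them from apps.json and from the storage bucket is not shown, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list containers that are requested but not yet on object storage.
# Inputs: two text files, one container name per line
# (e.g. convert3d_1.0.0_20210104). How the lists are produced is not shown.
missing_containers() {
  local requested="$1" available="$2"
  # comm -23 keeps only lines that appear in the first sorted input
  comm -23 <(sort -u "$requested") <(sort -u "$available")
}
```

Each missing name would then be built and uploaded. Assuming the vnmd/<tool>_<version>:<builddate> Docker tag scheme seen in the DUCC recipe further down, something like "singularity build convert3d_1.0.0_20210104.simg docker://vnmd/convert3d_1.0.0:20210104" builds a Singularity image from the Docker image.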

transparent-singularity:

transparent-singularity offers seamless access to applications installed in neurodesktop and neurocommand, treating containerised software as native installations.

More info: transparent-singularity (Github)

- script to install neuro-sub-containers; the installers are called by neurocommand

- this repo provides a way of using our containers on HPCs for large scale processing of the pipelines (including the support of SLURM and other job schedulers)

- CI: tests if exposing binaries from the container works

Neurocontainers:

neurocontainers contains scripts for building sub-containers for neuroimaging data-analysis software. These containers can be used alongside neurocommand or transparent-singularity.

To get started, see: Neurocontainers (Github)

- build scripts for neuro-sub-containers

- CI: building and testing of containers

- CD: pushing containers to github and dockerhub registry

Neurodocker:

Neurodocker is a command-line program that generates custom Dockerfiles and Singularity recipes for neuroimaging and minifies existing containers.

More info: Github

- fork of neurodocker project

- provides the recipes used to build our containers

- we contribute our recipes back to the upstream project via pull requests

- CI: handled by neurodocker - testing of generating container recipes

2 - Neurodesktop Release Process

Neurodesktop:

- Check if the last automated build ran OK: https://github.com/NeuroDesk/neurodesktop/actions

- Run the image from this build date and test that everything works and that no regressions occurred

- Check what changes were made since the last release: https://github.com/NeuroDesk/neurodesktop/commits/main

- Summarize the main changes and copy this to the Release History: https://www.neurodesk.org/docs/overview/release-history/

- Change the version of the latest desktop in https://github.com/NeuroDesk/neurodesk.github.io/blob/main/data/neurodesktop.toml

- Commit all changes

- Post a quick summary of the changes and announce the new version on Mastodon: https://masto.ai/@Neurodesk

Neurodesk App:

Release process

Follow these steps to create a new release of the Neurodesk App.

If there is a new version of the Neurodesktop image, a GitHub Action will open a PR that updates jupyter_neurodesk_version in the neurodesktop.toml file. Double-check and merge this PR.

- Create a new release on GitHub as pre-release. Set the release tag to the value of the target application version and prefix it with v (for example v1.0.0 for Neurodesk App version 1.0.0). Enter the release title and release notes. The release needs to stay as pre-release for GitHub Actions to be able to attach installers to it.

- Make sure that the application is building, installing and running properly.

- From the main branch, create a branch, preferably named release-v<new-version>. Add a commit that updates the version in the package.json file (for example "version": "1.0.0"). This is necessary for GitHub Actions to be able to attach installers to the release.

- GitHub Actions will automatically create installers for each platform (Linux, macOS, Windows) and upload them as release assets. Assets will be uploaded only if a release of type pre-release is found whose tag matches the Neurodesk App version with a v prefix. For example, if the Neurodesk App version in the PR is 1.0.0, the installers will be uploaded to a release that is flagged as pre-release and has the tag v1.0.0. New commits to this branch will overwrite the installer assets of the release.

- Once all the changes are complete and the installers are uploaded to the release, publish the release.
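Because installers are only uploaded when the pre-release tag matches the package.json version with a v prefix, it can help to derive the tag from package.json instead of typing it by hand. A minimal sketch, assuming the top-level "version" field sits on its own line as in a normally formatted package.json; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: derive the release tag (v<version>) from package.json.
# Uses sed rather than jq to avoid extra dependencies; assumes the first
# "version" line in the file is the top-level application version.
release_tag() {
  local pkg="${1:-package.json}" version
  version=$(sed -n 's/^[[:space:]]*"version":[[:space:]]*"\([^"]*\)".*/\1/p' "$pkg" | head -n 1)
  if [ -z "$version" ]; then
    echo "no version field found in $pkg" >&2
    return 1
  fi
  printf 'v%s\n' "$version"
}

# Creating the matching pre-release with the GitHub CLI (not run here):
# gh release create "$(release_tag)" --prerelease --title "Neurodesk App $(release_tag)" --notes "Release notes here"
```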

Update MacOS certificate

Follow these step-by-step instructions to generate and export the required macOS certificate for a Neurodesk App release.

- Launch the “Keychain Access” application on your Mac and go to “Certificate Assistant”.

- Request a certificate from a Certificate Authority: within “Certificate Assistant”, select “Request a Certificate from a Certificate Authority”. This generates a certificate signing request.

- Go to the Apple Developer website (https://developer.apple.com/account/resources/certificates/add) and upload the generated certificate signing request.

- After uploading it, download the resulting certificate file provided by the Apple Developer website.

- Import the downloaded certificate into Keychain Access.

- Right-click the imported certificate in “Keychain Access”, choose “Export” and save it in .p12 format.

- Convert the .p12 file to Base64 using the following command:

openssl base64 -in neurodesk_certificate.p12
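The command above wraps its output at 64 characters per line. If the value is pasted into a CI secret, a single-line encoding plus a round-trip check can catch copy-paste mistakes. A sketch using only openssl and cmp; the file names and function names are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: encode a .p12 file as one base64 line and verify the encoding.
encode_p12() {
  local p12="$1" out="$2"
  # -A puts the whole encoding on a single line
  openssl base64 -A -in "$p12" -out "$out"
}

verify_p12_encoding() {
  local p12="$1" b64="$2"
  # decode to stdout and compare byte-for-byte with the original file
  openssl base64 -d -A -in "$b64" | cmp -s - "$p12"
}

# Usage:
# encode_p12 neurodesk_certificate.p12 neurodesk_certificate.b64
# verify_p12_encoding neurodesk_certificate.p12 neurodesk_certificate.b64 && echo OK
```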

3 - Neurodesktop Dev

Warning

For development and testing only. Not recommended for production use.

Building neurodesktop-dev

Dev builds can be triggered by Neurodesk admins from https://github.com/NeuroDesk/neurodesktop/actions/workflows/build-neurodesktop-dev.yml

Running latest neurodesktop-dev

Linux

docker pull vnmd/neurodesktop-dev:latest

sudo docker run \
--shm-size=1gb -it --cap-add SYS_ADMIN \
--security-opt apparmor:unconfined --device=/dev/fuse \
--name neurodesktop-dev \
-v ~/neurodesktop-storage:/neurodesktop-storage \
-e NB_UID="$(id -u)" -e NB_GID="$(id -g)" \
-p 8888:8888 -e NEURODESKTOP_VERSION=dev \
vnmd/neurodesktop-dev:latest

Windows

docker pull vnmd/neurodesktop-dev:latest

docker run --shm-size=1gb -it --cap-add SYS_ADMIN --security-opt apparmor:unconfined --device=/dev/fuse --name neurodesktop-dev -v C:/neurodesktop-storage:/neurodesktop-storage -p 8888:8888 -e NEURODESKTOP_VERSION=dev vnmd/neurodesktop-dev:latest

4 - Transparent Singularity

The Transparent Singularity repository is here: https://github.com/NeuroDesk/transparent-singularity/

This project allows you to use Singularity containers transparently on HPCs, so that an application inside a container can be used without adjusting any scripts or pipelines (e.g. nipype).

Important: add bind points to .bashrc before executing this script

This script expects that you have adjusted the Singularity bind points in your .bashrc, e.g.:

export SINGULARITY_BINDPATH="/gpfs1/,/QRISdata,/data"
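Singularity can refuse to start when a bind source does not exist on the host, so a quick pre-check of SINGULARITY_BINDPATH can catch typos before running the install script. A sketch, assuming comma-separated entries that are either plain paths or src:dest pairs; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: report entries of SINGULARITY_BINDPATH whose source path is missing.
# Returns 1 if at least one source path does not exist.
check_bindpath() {
  local missing=0 entry src
  local IFS=','
  for entry in ${SINGULARITY_BINDPATH:-}; do
    src="${entry%%:*}"            # drop an optional ":dest[:opts]" suffix
    [ -z "$src" ] && continue
    if [ ! -e "$src" ]; then
      echo "missing bind path: $src" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check_bindpath || echo "fix SINGULARITY_BINDPATH in ~/.bashrc first"
```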

This gives you a list of all tested images available in neurodesk:

https://github.com/NeuroDesk/neurocommand/blob/main/cvmfs/log.txt

curl -s https://raw.githubusercontent.com/NeuroDesk/neurocommand/main/cvmfs/log.txt

Clone the repository into a folder named after the intended image:

git clone https://github.com/NeuroDesk/transparent-singularity convert3d_1.0.0_20210104
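The folder name is the image name, which in the examples here follows a <tool>_<version>_<builddate> pattern. A small sketch for splitting such a name, assuming the last two underscore-separated fields are always the version and the build date (parsing from the right keeps tool names that contain "_" intact); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: split "<tool>_<version>_<builddate>" into its three parts.
parse_image_name() {
  local name="$1" date rest version tool
  date="${name##*_}"     # text after the last "_"
  rest="${name%_*}"      # everything before the last "_"
  version="${rest##*_}"
  tool="${rest%_*}"
  if [ "$rest" = "$name" ] || [ "$tool" = "$rest" ]; then
    echo "unexpected image name: $name" >&2
    return 1
  fi
  printf '%s %s %s\n' "$tool" "$version" "$date"
}

# Clone and install in one go (not run here):
# image=convert3d_1.0.0_20210104
# git clone https://github.com/NeuroDesk/transparent-singularity "$image" \
#   && cd "$image" && ./run_transparent_singularity.sh "$image"
```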

Install

This will create scripts for every binary in the container located in the $DEPLOY_PATH inside the container. It will also create activate and deactivate scripts and module files for lmod (https://lmod.readthedocs.io/en/latest)

cd convert3d_1.0.0_20210104

./run_transparent_singularity.sh convert3d_1.0.0_20210104
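To illustrate what "a script for every binary" means, here is a simplified sketch of the wrapper idea: each wrapper carries the name of one binary and forwards all its arguments to singularity exec, so a pipeline can call the tool as if it were installed natively. This is not the generator that transparent-singularity actually uses, and its real output may differ; the container path and binary names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: write one wrapper script per binary into outdir.
# Usage: make_wrappers <container> <outdir> <binary>...
make_wrappers() {
  local container="$1" outdir="$2"; shift 2
  local bin
  mkdir -p "$outdir"
  for bin in "$@"; do
    # $container and $bin are expanded now; \$@ stays literal for run time
    cat > "$outdir/$bin" <<EOF
#!/usr/bin/env bash
exec singularity exec "$container" $bin "\$@"
EOF
    chmod +x "$outdir/$bin"
  done
}

# Example: make_wrappers "$PWD/convert3d_1.0.0_20210104.simg" "$PWD/bin" c3d
```

With the wrapper folder on PATH, calling c3d runs the binary inside the container with the same arguments.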

Options for Transparent singularity:

- --storage: this option can be used to force a download from Docker, e.g.: --storage docker

- --container: this option can be used to explicitly define the name of the container to be downloaded

- --unpack: this unpacks the Singularity container so it can be used on systems that do not allow opening simg / sif files for security reasons, e.g.: --unpack true

- --singularity-opts: this is passed on to the singularity call, e.g.: --singularity-opts '--bind /cvmfs'

Use in module system LMOD

Add the module folder path to $MODULEPATH
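In Lmod, "module use <dir>" adds a folder to MODULEPATH (prepending it by default). When the path has to be set without the module command, for example early in .bashrc, a small idempotent helper does a similar job. A sketch; the module folder path is a placeholder and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: prepend a folder to MODULEPATH unless it is already listed.
add_modulepath() {
  local dir="$1"
  case ":${MODULEPATH:-}:" in
    *":$dir:"*) ;;                                   # already present
    *) export MODULEPATH="$dir${MODULEPATH:+:$MODULEPATH}" ;;
  esac
}

# Usage (placeholder path): add_modulepath /path/to/transparent-singularity/modules
# With Lmod loaded, the equivalent is: module use /path/to/transparent-singularity/modules
```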

Manual activation and deactivation (in case a module system is not available). This will add the paths to the .bashrc

Activate

source activate_convert3d_1.0.0_20210104.sh

Deactivate

source deactivate_convert3d_1.0.0_20210104.sif.sh

Uninstall container and cleanup

./ts_uninstall.sh

5 - Neurodesk CVMFS

5.1 - Setup CVMFS Proxy

To improve speed in a region, you can set up another Stratum 1 server or a proxy. We currently don’t run any proxy servers, but a proxy is important when using CVMFS on a cluster.

Setup a CVMFS proxy server

sudo yum install -y squid

Open squid.conf and use the following configuration:

sudo vi /etc/squid/squid.conf

# List of local IP addresses (separate IPs and/or CIDR notation) allowed to access your local proxy

#acl local_nodes src YOUR_CLIENT_IPS

# Destination domains that are allowed

acl stratum_ones dstdomain .neurodesk.org .openhtc.io .cern.ch .gridpp.rl.ac.uk .opensciencegrid.org

# Squid port

http_port 3128

# Allow access to the Stratum 1 domains listed in our stratum_ones ACL.

http_access allow stratum_ones

# Only allow access from our local machines

#http_access allow local_nodes

http_access allow localhost

# Finally, deny all other access to this proxy

http_access deny all

minimum_expiry_time 0

maximum_object_size 1024 MB

cache_mem 128 MB

maximum_object_size_in_memory 128 KB

# 5 GB disk cache

cache_dir ufs /var/spool/squid 5000 16 256

sudo squid -k parse

sudo systemctl start squid

sudo systemctl enable squid

sudo systemctl status squid

sudo systemctl restart squid

Then add the proxy to the CVMFS client configuration on each client (e.g. /etc/cvmfs/default.local):

CVMFS_HTTP_PROXY="http://proxy-address:3128"
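The proxy setting can also be written non-interactively on each client. A minimal sketch, assuming the client reads /etc/cvmfs/default.local (the usual local override file) and that GNU sed is available; the function name is hypothetical. After changing the file, "cvmfs_config reload" applies the new setting and "cvmfs_config probe neurodesk.ardc.edu.au" checks that the repository is reachable.

```shell
#!/usr/bin/env bash
# Sketch: set CVMFS_HTTP_PROXY in a client config file, replacing any
# previous value. The file is a parameter so it can be tried on a copy.
set_cvmfs_proxy() {
  local proxy="$1" conf="${2:-/etc/cvmfs/default.local}"
  touch "$conf"
  sed -i '/^CVMFS_HTTP_PROXY=/d' "$conf"          # remove old setting
  printf 'CVMFS_HTTP_PROXY="%s"\n' "$proxy" >> "$conf"
}

# On a client, as root (not run here):
# set_cvmfs_proxy "http://proxy-address:3128"
# cvmfs_config reload && cvmfs_config probe neurodesk.ardc.edu.au
```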

5.2 - CVMFS architecture

We store our singularity containers unpacked on CVMFS. We tried the DUCC tool in the beginning, but it was causing too many issues with dockerhub and we were rate limited. The script to unpack our singularity containers is here: https://github.com/NeuroDesk/neurocommand/blob/main/cvmfs/sync_containers_to_cvmfs.sh

It gets called by a cronjob on the CVMFS Stratum 0 server and relies on the log.txt file being updated via an action in the neurocommand repository (https://github.com/NeuroDesk/neurocommand/blob/main/.github/workflows/upload_containers_simg.sh)

The Stratum 1 servers then pull this repo from Stratum 0 and our desktops mount these repos (configured here: https://github.com/NeuroDesk/neurodesktop/blob/main/Dockerfile)

The startup script (https://github.com/NeuroDesk/neurodesktop/blob/main/config/jupyter/before_notebook.sh) sets up CVMFS and tests which server is fastest during the container startup.

This can also be done manually:

sudo cvmfs_talk -i neurodesk.ardc.edu.au host info

sudo cvmfs_talk -i neurodesk.ardc.edu.au host probe

cvmfs_config stat -v neurodesk.ardc.edu.au
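The idea of picking the fastest server can be sketched with plain curl: time one request for the repository manifest on each Stratum 1 and keep the quickest. This is only an illustration, not the logic in before_notebook.sh; the server URLs are hypothetical placeholders, and unreachable servers get a large dummy time so they are never chosen.

```shell
#!/usr/bin/env bash
# Sketch: time one HEAD request per server and pick the quickest.
REPO=neurodesk.ardc.edu.au

time_server() {
  # prints "<url> <seconds>"; failed requests get 999 so they sort last
  local url="$1" t
  if t=$(curl -sf -o /dev/null --head --max-time 5 -w '%{time_total}' \
      "$url/cvmfs/$REPO/.cvmfspublished"); then
    printf '%s %s\n' "$url" "$t"
  else
    printf '%s 999\n' "$url"
  fi
}

pick_fastest() {
  # reads "<url> <seconds>" lines on stdin and prints the fastest URL
  LC_ALL=C sort -k2,2g | head -n 1 | cut -d' ' -f1
}

# Usage (needs network access; hypothetical server names):
# for s in http://stratum1-a.example.org http://stratum1-b.example.org; do
#   time_server "$s"
# done | pick_fastest
```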

5.3 - Setup Stratum 0 server

Set up a Stratum 0 server:

Setup Storage

(would object storage be better? -> see comment below under next iteration ideas)

lsblk -l

sudo mkfs.ext4 /dev/vdb

sudo mkdir /storage

sudo mount /dev/vdb /storage/ -t auto

sudo chown ec2-user /storage/

sudo chmod a+rwx /storage/

sudo vi /etc/fstab

/dev/vdb /storage auto defaults,nofail 0 2
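Instead of editing /etc/fstab in vi, the entry can be added only when no line for the mount point exists yet, which makes the step safe to re-run. A sketch, assuming whitespace-separated fstab fields; the fstab path is a parameter so it can be tried on a copy, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: append "<device> <mountpoint> auto defaults,nofail 0 2" to an
# fstab file unless a non-comment entry for that mount point exists.
add_fstab_entry() {
  local device="$1" mountpoint="$2" fstab="${3:-/etc/fstab}"
  # field 2 of each non-comment line is the mount point
  if awk -v mp="$mountpoint" '$1 !~ /^#/ && $2 == mp { found=1 } END { exit !found }' "$fstab"; then
    echo "entry for $mountpoint already present" >&2
    return 0
  fi
  printf '%s %s auto defaults,nofail 0 2\n' "$device" "$mountpoint" >> "$fstab"
}

# On the server, as root: add_fstab_entry /dev/vdb /storage && mount -a
```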

Setup server

sudo yum install vim htop gcc git screen

sudo timedatectl set-timezone Australia/Brisbane

sudo yum install -y https://ecsft.cern.ch/dist/cvmfs/cvmfs-release/cvmfs-release-latest.noarch.rpm

sudo yum install -y cvmfs cvmfs-server

sudo systemctl enable httpd

sudo systemctl restart httpd

# sudo systemctl stop firewalld

# restore keys (the cd and scp below run on the machine that holds the key backup):

sudo mkdir /etc/cvmfs/keys/incoming

sudo chmod a+rwx /etc/cvmfs/keys/incoming

cd connections/cvmfs_keys/

scp neuro* ec2-user@203.101.226.164:/etc/cvmfs/keys/incoming

sudo mv /etc/cvmfs/keys/incoming/* /etc/cvmfs/keys/

#backup keys:

#mkdir cvmfs_keys

#scp opc@158.101.127.61:/etc/cvmfs/keys/neuro* .

sudo cvmfs_server mkfs -o $USER neurodesk.ardc.edu.au

cd /storage

sudo mkdir -p cvmfs-storage/srv/

cd /srv/

sudo mv cvmfs/ /storage/cvmfs-storage/srv/

sudo ln -s /storage/cvmfs-storage/srv/cvmfs/

cd /var/spool

sudo mkdir /storage/spool

sudo mv cvmfs/ /storage/spool/

sudo ln -s /storage/spool/cvmfs .

cvmfs_server transaction neurodesk.ardc.edu.au

cvmfs_server publish neurodesk.ardc.edu.au
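Changes to the repository happen between transaction and publish. If the update step fails, the open transaction can be discarded with "cvmfs_server abort -f" so the repository is not left stuck in a transaction. A minimal wrapper sketch; the function name and the example update command are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: run an update command inside a CVMFS transaction; publish on
# success, abort (without prompting) on failure.
# Usage: publish_with_rollback <repo> <command> [args...]
publish_with_rollback() {
  local repo="$1"; shift
  cvmfs_server transaction "$repo" || return 1
  if "$@"; then
    cvmfs_server publish "$repo"
  else
    echo "update failed, aborting transaction on $repo" >&2
    cvmfs_server abort -f "$repo"
    return 1
  fi
}

# Example (hypothetical update step):
# publish_with_rollback neurodesk.ardc.edu.au cp -r /tmp/new_files /cvmfs/neurodesk.ardc.edu.au/
```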

sudo vi /etc/cron.d/cvmfs_resign

0 11 * * 1 root /usr/bin/cvmfs_server resign neurodesk.ardc.edu.au
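The weekly resign job keeps the repository whitelist from expiring. To monitor it, the expiry date can be read from .cvmfswhitelist, where the line starting with E holds the expiry timestamp as YYYYMMDDhhmmss in UTC. A sketch assuming GNU date; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: read the whitelist expiry and compute the days left.
whitelist_expiry() {
  # reads .cvmfswhitelist content on stdin, prints the "E" timestamp
  LC_ALL=C sed -n 's/^E\([0-9]\{14\}\)$/\1/p' | head -n 1
}

days_until() {
  # whole days from now until a YYYYMMDDhhmmss UTC timestamp (GNU date)
  local ts="$1" expiry_s now_s
  expiry_s=$(date -u -d "${ts:0:4}-${ts:4:2}-${ts:6:2} ${ts:8:2}:${ts:10:2}:${ts:12:2}" +%s) || return 1
  now_s=$(date -u +%s)
  echo $(( (expiry_s - now_s) / 86400 ))
}

# Usage on the Stratum 0 (needs the web server running):
# days_until "$(curl -s http://localhost/cvmfs/neurodesk.ardc.edu.au/.cvmfswhitelist | whitelist_expiry)"
```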

cat /etc/cvmfs/keys/neurodesk.ardc.edu.au.pub

-----BEGIN PUBLIC KEY-----
MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAuV9JBs9uXBR83qUs7AiE
nSQfvh6VCdNigVzOfRMol5cXsYq3cFy/Vn1Nt+7SGpDTQArQieZo4eWC9ww2oLq0
vY1pWyAms3Y4i+IUmMbwNifDU4GQ1KN9u4zl9Peun2YQCLE7mjC0ZLQtLM7Q0Z8h
NwP8jRJTN+u8mRKzkyxfSMLscVMKhm2pAwnT1zB9i3bzVV+FSnidXq8rnnzNHMgv
tfqx1h0gVyTeodToeFeGG5vq69wGZlwEwBJWVRGzzr+a8dWNBFMJ1HxamrBEBW4P
AxOKGHmQHTGbo+tdV/K6ZxZ2Ry+PVedNmbON/EPaGlI8Vd0fascACfByqqeUEhAB
dQIDAQAB
-----END PUBLIC KEY-----

Next iteration of this:

use object storage?

- current implementation uses block storage, but this makes increasing the volume size a bit more work

- we couldn’t get object storage to work on Oracle as it assumes AWS S3 -> Try again on AWS

Optimize settings for repositories for Container Images

From the CVMFS documentation: Repositories containing Linux container image contents (that is: container root file systems) should use overlayfs as a union file system and have the following configuration:

CVMFS_INCLUDE_XATTRS=true

CVMFS_VIRTUAL_DIR=true

With these settings, extended attributes of files, such as file capabilities and SELinux attributes, are recorded, and previous file system revisions can be accessed from the clients.

Currently not used

We tested the DUCC tool in the beginning, but it was leading to too many docker pulls and we therefore replaced it with our own script: https://github.com/NeuroDesk/neurocommand/blob/main/cvmfs/sync_containers_to_cvmfs.sh

This is the old DUCC setup

sudo yum install cvmfs-ducc.x86_64

sudo -i

dnf install -y yum-utils

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

dnf install docker-ce docker-ce-cli containerd.io

systemctl enable docker

systemctl start docker

docker version

docker info

# leave root mode

sudo groupadd docker

sudo usermod -aG docker $USER

sudo chown root:docker /var/run/docker.sock

newgrp docker

vi convert_appsjson_to_wishlist.sh

export DUCC_DOCKER_REGISTRY_PASS=configure_secret_password_here_and_dont_push_to_github

cd neurodesk

git pull

./gen_cvmfs_wishlist.sh

cvmfs_ducc convert recipe_neurodesk_auto.yaml

cd ..

chmod +x convert_appsjson_to_wishlist.sh

git clone https://github.com/NeuroDesk/neurodesk/

# setup cron job

sudo vi /etc/cron.d/cvmfs_dockerpull

*/5 * * * * opc cd ~ && bash /home/opc/convert_appsjson_to_wishlist.sh

#vi recipe.yaml

#version: 1

#user: vnmd

#cvmfs_repo: neurodesk.ardc.edu.au

#output_format: '$(scheme)://$(registry)/vnmd/thin_$(image)'

#input:

#- 'https://registry.hub.docker.com/vnmd/tgvqsm_1.0.0:20210119'

#- 'https://registry.hub.docker.com/vnmd/itksnap_3.8.0:20201208'

#cvmfs_ducc convert recipe_neurodesk.yaml

#cvmfs_ducc convert recipe_unpacked.yaml

5.4 - Setup Stratum 1 server

The stratum 1 servers for the desktop are configured here: https://github.com/NeuroDesk/neurodesktop/blob/main/Dockerfile

To improve speed in a region, you can set up another Stratum 1 server or a proxy.

Set up a Stratum 1 server (this setup works best on Rocky Linux 9):

sudo yum install -y https://ecsft.cern.ch/dist/cvmfs/cvmfs-release/cvmfs-release-latest.noarch.rpm

sudo yum install -y cvmfs-server squid tmux

sudo yum install -y python3-mod_wsgi

sudo dnf install dnf-automatic -y

sudo systemctl enable --now dnf-automatic-install.timer

sudo systemctl status dnf-automatic-install

sudo systemctl cat dnf-automatic-install.timer

sudo vi /etc/dnf/automatic.conf

# check if automatic updates are downloaded and applied

tmux new -s cvmfs

# return to session: tmux a -t cvmfs

sudo sed -i 's/Listen 80/Listen 127.0.0.1:8080/' /etc/httpd/conf/httpd.conf

set +H  # disable bash history expansion so the "!" in the squid ACL below is not expanded

echo "http_port 80 accel" | sudo tee /etc/squid/squid.conf

echo "http_port 8000 accel" | sudo tee -a /etc/squid/squid.conf

echo "http_access allow all" | sudo tee -a /etc/squid/squid.conf

echo "cache_peer 127.0.0.1 parent 8080 0 no-query originserver" | sudo tee -a /etc/squid/squid.conf

echo "acl CVMFSAPI urlpath_regex ^/cvmfs/[^/]*/api/" | sudo tee -a /etc/squid/squid.conf

echo "cache deny !CVMFSAPI" | sudo tee -a /etc/squid/squid.conf

echo "cache_mem 128 MB" | sudo tee -a /etc/squid/squid.conf

sudo systemctl start httpd

sudo systemctl start squid

sudo systemctl enable httpd

sudo systemctl enable squid

# You need to add your own GeoIP (MaxMind) account data here!

echo 'CVMFS_GEO_ACCOUNT_ID=APPLY_FOR_ONE_THIS_IS_a_SIX_DIGIT_NUMBER' | sudo tee -a /etc/cvmfs/server.local

echo 'CVMFS_GEO_LICENSE_KEY=APPLY_FOR_ONE_THIS_IS_a_password' | sudo tee -a /etc/cvmfs/server.local

sudo chmod 600 /etc/cvmfs/server.local

sudo mkdir -p /etc/cvmfs/keys/ardc.edu.au/

echo "-----BEGIN PUBLIC KEY-----
MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAwUPEmxDp217SAtZxaBep
Bi2TQcLoh5AJ//HSIz68ypjOGFjwExGlHb95Frhu1SpcH5OASbV+jJ60oEBLi3sD
qA6rGYt9kVi90lWvEjQnhBkPb0uWcp1gNqQAUocybCzHvoiG3fUzAe259CrK09qR
pX8sZhgK3eHlfx4ycyMiIQeg66AHlgVCJ2fKa6fl1vnh6adJEPULmn6vZnevvUke
I6U1VcYTKm5dPMrOlY/fGimKlyWvivzVv1laa5TAR2Dt4CfdQncOz+rkXmWjLjkD
87WMiTgtKybsmMLb2yCGSgLSArlSWhbMA0MaZSzAwE9PJKCCMvTANo5644zc8jBe
NQIDAQAB
-----END PUBLIC KEY-----" | sudo tee /etc/cvmfs/keys/ardc.edu.au/neurodesk.ardc.edu.au.pub

sudo cvmfs_server add-replica -o $USER http://stratum0.neurodesk.cloud.edu.au/cvmfs/neurodesk.ardc.edu.au /etc/cvmfs/keys/ardc.edu.au

# CVMFS will store everything in /srv/cvmfs so make sure there is enough space or create a symlink to a bigger storage volume

# e.g.:

#cd /storage

#sudo mkdir -p cvmfs-storage/srv/

#cd /srv/

#sudo mv cvmfs/ /storage/cvmfs-storage/srv/

#sudo ln -s /storage/cvmfs-storage/srv/cvmfs/

sudo cvmfs_server snapshot neurodesk.ardc.edu.au

# If this keeps failing with errors like "Processing chunks [21605 registered chunks]: failed to download http://stratum0.neurodesk.cloud.edu.au/cvmfs/neurodesk.ardc.edu.au/data/03/99e1faa88d0d66a8707cdecdc9b063cc527e50 (17 - host data transfer cut short)

#couldn't reach Stratum 0 - please check the network connection

#terminate called after throwing an instance of 'ECvmfsException'

# what(): PANIC: /home/sftnight/jenkins/workspace/CvmfsFullBuildDocker/CVMFS_BUILD_ARCH/docker-x86_64/CVMFS_BUILD_PLATFORM/cc9/build/BUILD/cvmfs-2.13.0/cvmfs/swissknife_pull.cc : 286

#Download error

#Aborted (core dumped)"

# then this is a deep packet inspection issue on the Stratum 1 side. To get around this, create an SSH tunnel to the Stratum 0 server and transfer the data through that tunnel:

#ssh -L 8081:localhost:80 ec2-user@stratum0.neurodesk.cloud.edu.au

#sudo vi /etc/cvmfs/repositories.d/neurodesk.ardc.edu.au/server.conf

# change this:

#CVMFS_STRATUM0=http://stratum0.neurodesk.cloud.edu.au/cvmfs/neurodesk.ardc.edu.au

# to this:

#CVMFS_STRATUM0=http://localhost:8081/cvmfs/neurodesk.ardc.edu.au

# Then run the sync again
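The server.conf edit in the workaround above can also be scripted, keeping a .bak copy so the original Stratum 0 URL is easy to restore once the tunnel is closed. A sketch, assuming GNU sed; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: replace the CVMFS_STRATUM0 line in the replica's server.conf,
# leaving a backup of the previous file in <conf>.bak.
set_stratum0_url() {
  local url="$1"
  local conf="${2:-/etc/cvmfs/repositories.d/neurodesk.ardc.edu.au/server.conf}"
  sed -i.bak "s|^CVMFS_STRATUM0=.*|CVMFS_STRATUM0=$url|" "$conf"
}

# Through the tunnel, then back once the sync has finished (as root):
# set_stratum0_url http://localhost:8081/cvmfs/neurodesk.ardc.edu.au
# cvmfs_server snapshot neurodesk.ardc.edu.au
# set_stratum0_url http://stratum0.neurodesk.cloud.edu.au/cvmfs/neurodesk.ardc.edu.au
```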

echo "/var/log/cvmfs/*.log {
weekly
missingok
notifempty
}" | sudo tee /etc/logrotate.d/cvmfs

echo '*/5 * * * * root output=$(/usr/bin/cvmfs_server snapshot -a -i 2>&1) || echo "$output" ' | sudo tee /etc/cron.d/cvmfs_stratum1_snapshot

sudo yum install iptables

sudo iptables -t nat -A PREROUTING -p tcp -m tcp --dport 80 -j REDIRECT --to-ports 8000

sudo systemctl disable firewalld

sudo systemctl stop firewalld

# make sure that port 80 is open in the real firewall

sudo cvmfs_server update-geodb

#test

curl --head http://YOUR_IP_OR_DNS/cvmfs/neurodesk.ardc.edu.au/.cvmfspublished
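Beyond checking that .cvmfspublished is served, comparing its revision with the Stratum 0 shows whether the replica is up to date. In the manifest, the line starting with S carries the revision number, and the signature follows a line containing only "--". A sketch; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the revision number from a .cvmfspublished manifest.
published_revision() {
  # stop at the "--" separator so the binary signature is never parsed
  LC_ALL=C sed -n '/^--$/q; s/^S\([0-9][0-9]*\)$/\1/p'
}

# Usage (needs network access; YOUR_IP_OR_DNS as in the test above):
# r0=$(curl -s http://stratum0.neurodesk.cloud.edu.au/cvmfs/neurodesk.ardc.edu.au/.cvmfspublished | published_revision)
# r1=$(curl -s http://YOUR_IP_OR_DNS/cvmfs/neurodesk.ardc.edu.au/.cvmfspublished | published_revision)
# [ "$r0" = "$r1" ] && echo "in sync (revision $r0)" || echo "stratum 1 at $r1, stratum 0 at $r0"
```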

6 - Project Roadmap

All things we are currently working on and are planning to do are listed here: https://github.com/orgs/NeuroDesk/projects/9/views/4

The larger themes and subthemes are:

Streamlining container build and release process

Improving the workflow of how users can add new applications to Neurodesk

Adding new applications to Neurodesk requires multiple steps and back-and-forth between contributors and maintainers. We are aiming to simplify this process by developing an interactive workflow based on our current interactive container builder and the existing github workflows.

Some issues in this theme are:

Standardizing the container deployment

Currently, deploying the application containers relies on a combination of custom scripts distributed across several repositories (apps.json in the neurocommand repository, neurocontainers, transparent singularity). We would like to adopt community-standard tools, like SHPC, that can perform some of these tasks. The goal is to reduce duplicated effort and maintenance.

Some issues in this theme are:

- https://github.com/NeuroDesk/transparent-singularity/issues/7

- https://github.com/NeuroDesk/neurocommand/issues/152

- https://github.com/NeuroDesk/transparent-singularity/issues/8

- https://github.com/NeuroDesk/neurocommand/issues/187

- https://github.com/NeuroDesk/neurocontainers/issues/504

Reuse and citability of containers

Currently, there is no good way of describing and citing the individual software containers. We want to increase the reusability and citability of the software containers.

Some issues in this theme are:

- https://github.com/NeuroDesk/neurocontainers/issues/218

- https://github.com/NeuroDesk/neurocontainers/issues/217

- https://github.com/NeuroDesk/neurocontainers/issues/142

- https://github.com/NeuroDesk/neurocommand/issues/212

Improving user experience

Improving documentation

We would love to have more tutorials and examples that help people perform neuroimaging analyses in Neurodesk. When we developed our current documentation system (https://www.neurodesk.org/tutorials-examples/), we wanted an interactive documentation system that ensures that examples always work correctly because they are automatically tested. We have a first proof-of-concept that runs Jupyter notebooks and converts them to a website: https://www.neurodesk.org/example-notebooks/intro.html. However, errors are currently not flagged automatically, so the output needs to be checked manually.

Some issues in this theme are:

- https://github.com/NeuroDesk/neurodesk.github.io/issues/54

- https://github.com/NeuroDesk/neurodesk.github.io/issues/373

- https://github.com/NeuroDesk/neurodesk.github.io/issues/37

Facilitating the use of Neurodesk in teaching and workshops

Neurodesk is a great fit for teaching Neuroimaging methods. Currently, however, it’s not easy to run a custom Neurodesk instance for a larger group. We would like to make it easier for users to deploy Neurodesk for classes and workshops with a shared data storage location and we would love to support advanced features for cost saving (e.g. autoscaling, support of ARM processors) on various cloud providers (e.g. Google Cloud, Amazon, Azure, OpenStack, OpenShift).

Support of advanced workflows

Deeper Integration of containers and jupyter notebook system

We want to integrate the software containers deeper into the jupyter notebook system. Ideally, it is possible to use a jupyter kernel from within a software container.

Some issues in this theme are:

Support of scheduling workflows

Currently, all Neurodesk work is entirely interactive. We want to add a way of scheduling workflows and jobs so that larger computations can be managed efficiently.

Some issues in this theme are: